This article was also an Editors’ Pick on Towards Data Science.

A critical, powerful, precious resource sits mostly idle. Why?

Like a sleeping lion, the GPUs we already use have way more power than meets the eye (Photo by Dušan veverkolog on Unsplash)

In the last post, we explored how near-future business transformation is threatened by a GPU supply pinch. We know that GPU is a critical resource for rising technologies, that the expense is already a bottleneck for many organizations, and that the macro trends on the demand and supply sides for GPU (and related emerging chip designs) are pointing to a serious shortfall.

Yet over 85% of total GPU capacity sits idle. This is not only woefully inefficient financially for the companies that use GPU, but pretty shocking from an environmental perspective: the majority of global computing’s emissions, toxic chemicals, and water consumption are attributable to manufacturing processes, and all three would be cut drastically if we could do more with less. We certainly wouldn’t tolerate 85% of the gasoline we produce going to waste. So why do we tolerate GPU being used so inefficiently?

The short answer is: because (currently) we have little choice, due to the status-quo constraints of how we can connect software applications to the GPU they need.

The trend for decades in computing has been away from centralized systems and toward disaggregated services. Virtual machines, network-attached storage, software-defined networking, containers — all have contributed to today’s compute environment being more flexible and efficient than ever before.

But despite the rising criticality of accelerators in the modern data center, GPU sticks out like a sore thumb, remaining stubbornly static in its configurations.

Of course there are complex factors in play, but there is one major limitation that forces us into this inflexible model: an operating system running a GPU-hungry application is tightly leashed to that GPU by a high-throughput connection called Peripheral Component Interconnect Express, or PCIe for short.

Simply put, applications send a lot of data to their GPUs. The types of data sent depend greatly on the use case (textures, meshes, and command buffers for graphics, training datasets for machine learning, etc.), but in general the GPU must be very “close”, in networking terms, to the OS where the application is running — usually inside the same physical machine — leaving the OS environment physically tethered, usually one-to-one, to the GPU.

When an organization asks “how do our GPUs perform?”, they might run a GPU benchmark. But benchmarks are designed to push the GPU to maximum utilization — all they can tell you is the best-case performance scenario. It’s like measuring miles to the gallon while your car is coasting downhill — interesting, but not representative of reality. What you actually need to know is how much mileage you’re getting under real-world conditions.
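One way to get that real-world number is to sample utilization while normal workloads run, rather than during a benchmark. The sketch below assumes an Nvidia GPU with the `nvidia-smi` command-line tool installed. The helper names (`parse_utilization`, `read_utilization`, `monitor`) are hypothetical, chosen for illustration. Note that the utilization figure `nvidia-smi` reports is a coarse measure, so treat the result as a rough indicator rather than a precise accounting.

```python
import subprocess
import time

def parse_utilization(output):
    """Parse nvidia-smi CSV output: one integer percentage per GPU, one per line."""
    return [int(line.strip()) for line in output.splitlines() if line.strip()]

def read_utilization():
    """Ask nvidia-smi for the current utilization (%) of every GPU in this machine."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=utilization.gpu",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_utilization(result.stdout)

def average_utilization(samples):
    """Average each GPU's utilization across samples (each sample is a per-GPU list)."""
    num_gpus = len(samples[0])
    return [sum(s[i] for s in samples) / len(samples) for i in range(num_gpus)]

def monitor(num_samples=60, interval_s=1.0, reader=read_utilization):
    """Collect num_samples readings, interval_s apart, and return per-GPU averages."""
    samples = []
    for _ in range(num_samples):
        samples.append(reader())
        time.sleep(interval_s)
    return average_utilization(samples)

# Example (requires an Nvidia GPU and driver):
# print(monitor(num_samples=60, interval_s=1.0))
```

Running `monitor` during an ordinary working day, instead of during a benchmark, gives the "real-world mileage" figure rather than the downhill one.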

And as we touched on in the previous post, real-world conditions for most computing workloads that run on GPUs are very “spiky”: they use a lot of GPU, then do something else for a while, then come back and ask the GPU to work hard again, then go off and do something else again.

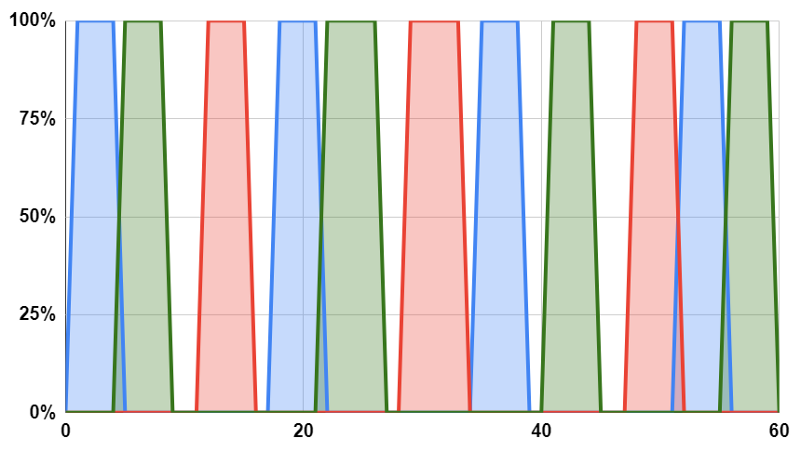

A real-world GPU utilization pattern over the course of a minute might look something like this:

So an organization might know the maximum performance of its GPUs, and might know that every one of its GPUs is in use. What is less obvious is that each individual GPU is, on average, operating at below 15% utilization, because it’s being used “selfishly” by a single physically-tethered OS instance that is exhibiting a spiky utilization pattern.
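The gap between "in use" and "well used" is easy to see with a little arithmetic. The sketch below builds a synthetic one-minute trace (an illustrative assumption, not measured data) in which a GPU runs flat out for 2 seconds out of every 15, and then compares its peak with its average. The function names and the burst pattern are hypothetical.

```python
def spiky_trace(seconds=60, period=15, burst=2):
    """One utilization sample (%) per second: 100 during a burst, 0 otherwise."""
    return [100 if t % period < burst else 0 for t in range(seconds)]

def summarize(trace):
    """Return (peak %, mean %, fraction of seconds with any activity)."""
    peak = max(trace)
    mean = sum(trace) / len(trace)
    busy_fraction = sum(1 for u in trace if u > 0) / len(trace)
    return peak, mean, busy_fraction

peak, mean, busy_fraction = summarize(spiky_trace())
print(f"peak={peak}%  mean={mean:.1f}%  busy={busy_fraction:.0%} of the time")
```

In this made-up trace the GPU hits 100% at its peak, so it looks fully "in use", yet its average utilization is only about 13%.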

This is the hidden world of GPU inefficiency.

Pause a moment to ponder the implications of this:

Your business has decided to make a significant (and almost certainly growing) investment in high-performance computing via GPU for some purpose, either graphical (perhaps video game development or animation) or pure computing (maybe deep learning). It has purchased or leased on-premises GPU hardware, is renting it from a cloud provider, or is doing a hybrid of both.

But the status-quo selfish mode of operation, which we take for granted, services spiky workloads through a physically-handcuffed one-OS-instance-to-one-GPU model and leaves 85% of that investment wasted.

Of course, there are some existing strategies for improving GPU efficiency, and I’d be remiss if I didn’t mention them. Here it’s helpful to make a key distinction between splitting and sharing:

Splitting: cutting GPU capacity into smaller separate chunks (as Nvidia’s Multi-Instance GPU, or MIG, does) and/or scheduled time chunks (using an orchestration system like Slurm), with each chunk still available to only one consuming client at a time.

Splitting doesn’t address the selfishness problem at all — any given chunk will still experience spiky utilization.

Sharing: pooling GPU capacity so it’s available to multiple consuming clients simultaneously, with clients’ workloads overlapping within the same shared GPU chunk.

Nvidia’s vGPU can do this, but all consuming clients must be VMs on the same physical machine as the GPU — again, the physical tethering problem — which severely limits the size of the pool and thus the impact on GPU utilization.

Imagine instead “true sharing”: allowing many client systems to access the many GPU chunks remotely and simultaneously, flexing up to 100% utilization of the chunk when their workloads spike but leaving the full GPU capacity available to other clients’ spikes when needed.

Just like our power grid balances capacity by pooling providers and consumers across a wide area rather than putting a power station on every block, greatly expanding the pool of clients (consumers of GPU) and servers (providers of GPU) makes it far more likely that a given unit of work can be served by a right-sized unit of hardware — letting us efficiently match supply with demand.

In this world, GPU acceleration looks much more like a simple service, or even a utility — and most importantly, is operating at close to 100% utilization.
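The pooling argument can be illustrated with a toy simulation. The sketch below is a simplification under stated assumptions: each client independently wants one full GPU about 15% of the time, and network latency, memory limits, and scheduling overhead are all ignored. It compares the dedicated one-GPU-per-client model with a shared pool sized for the worst simultaneous demand seen in the run. The function name and every parameter are hypothetical.

```python
import math
import random

def simulate(num_clients=20, steps=600, busy_prob=0.15, seed=42):
    """Compare dedicated GPUs against a shared pool for randomly spiky clients."""
    rng = random.Random(seed)
    # demand[c][t] is 1.0 when client c wants a full GPU at step t, else 0.0.
    demand = [[1.0 if rng.random() < busy_prob else 0.0 for _ in range(steps)]
              for _ in range(num_clients)]
    total_work = sum(sum(row) for row in demand)

    # Dedicated ("selfish") model: one GPU per client, busy or not.
    dedicated_gpus = num_clients
    dedicated_util = total_work / (dedicated_gpus * steps)

    # Pooled model: enough GPUs to cover the largest simultaneous demand.
    peak_demand = max(sum(demand[c][t] for c in range(num_clients))
                      for t in range(steps))
    pooled_gpus = max(1, math.ceil(peak_demand))
    pooled_util = total_work / (pooled_gpus * steps)

    return dedicated_gpus, dedicated_util, pooled_gpus, pooled_util

d_gpus, d_util, p_gpus, p_util = simulate()
print(f"dedicated: {d_gpus} GPUs at {d_util:.0%} average utilization")
print(f"pooled:    {p_gpus} GPUs at {p_util:.0%} average utilization")
```

Sizing the pool for the single worst moment is deliberately conservative, so this toy model understates what pooling could achieve. Letting a small amount of work queue briefly, or drawing on a larger and more varied population of clients, would push pooled utilization higher still.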

Delivering on this vision requires two major leaps.

First, we have to break free from the PCIe tether. Though there are emerging solutions that increase the range of PCIe to expand the pool, the pool is still limited. These solutions also require additional, pricey dedicated hardware, and aren’t suited to many environments.

Second, and as a consequence, our solution needs to be software-only. This way we can deploy it ubiquitously across any operating system, any device, and any environment, for any accelerator use case, without having to sweat too many details. A software-only approach can also be trialed easily, delivered easily, and sold at low cost compared with the substantial value created through increased utilization, so everybody wins.

We’re well on the way to delivering on both of these leaps. In my next post, I’ll explain how.

Steve Golik is the co-founder of Juice Labs, a startup with a vision to make computing power flow as easily as electricity.